I realize Marc Andreessen’s Techno-Optimist Manifesto is old news, but it seems to have made some waves, and I keep hearing people talking about it, so I finally decided to dig in and read it.

Basically, the Manifesto is Andreessen spending about 5,000 words spelling out his values and his vision for the future of humanity. Having written more than a few 5,000-word screeds myself, I sympathize. Pretty much everything I write here on Windypundit is shaped by my core values and beliefs, and from time to time I’ve been tempted to write a self-important “What I Believe!” manifesto.

So far, I’ve resisted. Marc Andreessen has not.

Most of The Techno-Optimist Manifesto is a summary of common classical liberal economic talking points: technology makes us richer, markets are good, the future is bright. It’s the sort of thing you might get if Milton Friedman and Deirdre McCloskey had written it together. (I would love to hear what Deirdre McCloskey thinks of the Techno-Optimist Manifesto.) To that extent, I have no objection to the basic thrust of most of the Manifesto.

Which is not to say Andreessen gets everything right. For example, in what is otherwise a fairly economically literate document, this paragraph is filled with nonsense:

> We believe markets also increase societal well being by generating work in which people can productively engage. We believe a Universal Basic Income would turn people into zoo animals to be farmed by the state. Man was not meant to be farmed; man was meant to be useful, to be productive, to be proud.

First of all, it’s not work that makes our lives better. Work is an unfortunate necessity. It’s the products of work that really matter. In primitive economies, we consume those work products ourselves — food, shelter, and clothing. In more advanced trading economies, we exchange those work products with other people to get what we want. In our modern industrial economy, some of our work products are tools and factories which make other work more efficient, resulting in yet more work products to trade and consume.

Second, I am mystified by the characterization of Universal Basic Income as turning people into “zoo animals to be farmed by the state.” Andreessen seems to be implying that giving people a guaranteed income will somehow harm them by depriving them of the glories of being “useful” and “productive.” But UBI deprives its recipients of nothing. Anybody would still be free to work if they wanted to.

Granted, there are certainly reasons to be concerned about the taxpayers who pay to fund UBI payments, but no one seriously questions that the people receiving UBI will be better off for it. They are getting free money to spend on anything they want. (Or they can save it for later.) I don’t understand how anyone who advocates so strongly for free markets could think that enabling people to buy more stuff will harm them.

Third, I am very skeptical about expressions of concern that people receiving welfare payments will become “dependent.” I don’t think I’ve ever heard that sentiment expressed by someone who has actually received welfare. “I had to stop taking the free money because it was making me dependent,” said no one ever. I suspect this argument is mostly used as cover for simply not wanting to pay taxes to fund welfare programs. The tell is that you never hear concerns about making people “dependent” when the money comes from charitable donations instead of taxes.

But the real problems with the Techno-Optimist Manifesto are less technical and more about some really optimistic assumptions.

> We believe in accelerationism – the conscious and deliberate propulsion of technological development – to ensure the fulfillment of the Law of Accelerating Returns. To ensure the techno-capital upward spiral continues forever.

Forever is a long, long time for anything to continue.

> We believe intelligence is in an upward spiral – first, as more smart people around the world are recruited into the techno-capital machine; second, as people form symbiotic relationships with machines into new cybernetic systems such as companies and networks; third, as Artificial Intelligence ramps up the capabilities of our machines and ourselves.
>
> We believe we are poised for an intelligence takeoff that will expand our capabilities to unimagined heights.

What Andreessen is talking about here is a conceptualization of the human future commonly called the singularity. The theory behind it goes something like this:

- Humans are smart enough to create all kinds of advanced technology.

- The more technology we have, the faster we create even more technology.

- This process will continue to accelerate until we have god-like super technology and everything is wonderful.

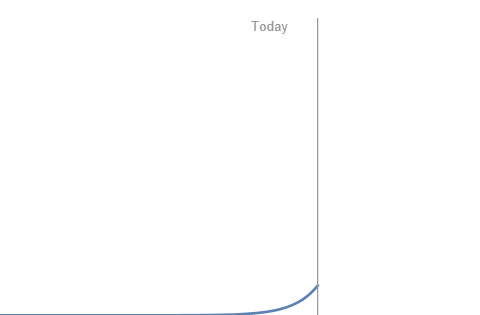

To be fair, there is some evidence for at least the first two steps of this process. If you look at almost any measure of human well-being and growth, the history shows a long period of stagnation followed by a sudden spurt of growth, something like this:

If we take a broad view of human civilization, for most of our history since the end of the last ice age, not much has changed. We almost immediately began the Neolithic Revolution (the development of agriculture, along with domestication of animals, pottery, and housing), but not much happened after that, at least not in terms of life-changing advances in technology.

Then about 300 years ago…something changed. We began a period of ever-more-rapid technological advancement, giving rise to the curve above.

Now if we try to picture the future, some people argue that the rate of progress is proportional to the amount of progress so far, and therefore we are at the beginning of an exponential curve that will take our civilization to stunning heights very quickly:

At present, we cannot imagine what such an amazing world would be like, but surely it will be wonderful for everyone, and we should do whatever is necessary to get there, right? (I’ll get back to that.)

Andreessen asserts that Artificial Intelligence will be a huge part of this process. He doesn’t spell it out, but elsewhere I have seen the theory expressed like this: If we humans can create an AI that is smarter than us — as some AI-ish technology is in certain areas — then certainly that AI should be able to create an AI that is smarter than it to an even greater degree. Then that AI should be able to get us to an even smarter AI…and so on, until we are as gods.

> We believe Artificial Intelligence is our alchemy, our Philosopher’s Stone – we are literally making sand think.
>
> We believe Artificial Intelligence is best thought of as a universal problem solver. And we have a lot of problems to solve.
>
> We believe Artificial Intelligence can save lives – if we let it. Medicine, among many other fields, is in the stone age compared to what we can achieve with joined human and machine intelligence working on new cures. There are scores of common causes of death that can be fixed with AI, from car crashes to pandemics to wartime friendly fire.

All of this…just seems unlikely. You just don’t see this kind of thing in nature, at least not for very long. Giraffe necks are long because length gives them an advantage in finding food to eat (or maybe in mating; the details are a bit hazy), but we never got giraffes with 50-foot-high necks. Some combination of engineering, biology, and resource limits led to diminishing returns to neck length, and giraffe necks stopped getting longer.

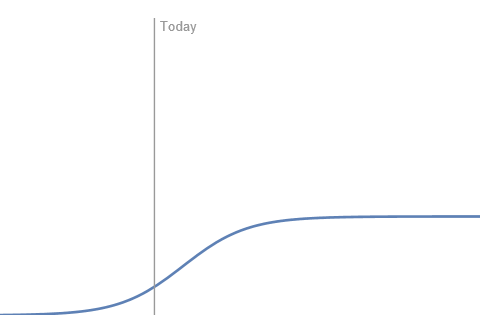

Nature has its limits. Blue whales got big, but only so big. Cheetahs got fast, but only so fast. Even basic biological reproduction, a process known to follow an exponential curve, eventually runs out of resources and levels off. Rabbits have children quickly, but we don’t have infinite rabbits. Things eventually level off.
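For the numerically inclined, here is a minimal sketch of the difference between unchecked exponential growth and growth that runs into a resource limit (the standard logistic model). The starting value, the growth rate, and the carrying capacity of 1000 are assumptions chosen purely for illustration. They are not measurements of rabbits, technology, or anything else.

```python
import math


def exponential(t, p0=1.0, r=0.5):
    """Unchecked growth: the growth rate is proportional to the current size."""
    return p0 * math.exp(r * t)


def logistic(t, p0=1.0, r=0.5, k=1000.0):
    """Same early growth rate, but growth slows as the size approaches the limit k."""
    return k / (1.0 + ((k - p0) / p0) * math.exp(-r * t))


# Print both curves side by side at a few points in time.
for t in range(0, 41, 5):
    print(f"t={t:2d}  exponential={exponential(t):14.1f}  logistic={logistic(t):7.1f}")
```

Early on, the two curves are nearly indistinguishable, which is part of why it is hard to tell from the inside which one you are on. Later, the exponential keeps climbing while the logistic flattens out just below its limit.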

I can’t see any reason why technological progress, even if AI driven, won’t eventually run into limits — resource limits, physical limits, computation limits, something — and level off. The out-of-control splendor of the singularity is fun to think about — it’s given us some great science fiction — but like everything else in nature, the growth of our civilization will probably reach an equilibrium. In nature, most things don’t follow exponential curves. They follow S-curves. I think the future of human welfare is going to be like this:

That’s not to any particular scale, of course. I have no way of knowing how good things will get. And to be clear, I don’t think of this as a dismal future. Barring some disaster, my gut feeling is that the peak of human flourishing will be pretty amazing. And I do believe some forms of artificial intelligence, broadly defined, will play a pivotal role.

That said, this is some kind of nonsense:

> We believe any deceleration of AI will cost lives. Deaths that were preventable by the AI that was prevented from existing is a form of murder.

The flipside of this argument is that some AI systems will kill people. The mass casualty AI disaster that everyone talks about is basically the Skynet scenario: A powerful AI gets out of control and decides it wants to kill a lot of people. But AI systems don’t have to achieve consciousness to kill people. All they have to do is fail.

Self-driving cars are already killing people in accidents, with varying degrees of liability. Industrial robots have been killing people for 45 years. Computer-guided cruise missiles have been killing people for decades, mostly by design, but a few have gone astray. The military is planning even more AI-controlled systems in the future, which will bring even more chances of fatal AI mishaps. It’s not hard to imagine a future AI-driven pharmaceutical plant producing the wrong flu vaccine, or an AI shipping management system getting its priorities confused and failing to deliver enough food to all of New England.

Many new technologies are dangerous until we learn what can go wrong with them, and I see no reason to think the powerful AI systems of the future will be different.

(I think even the Skynet scenario is worth worrying about, although I’m less worried about being killed by rogue military robots than being killed by well-functioning military robots obeying the commands of a madman.)

In any case, whatever AI doom you think more likely, I don’t think we can ignore the risk that AI will kill people, and at times we will have to proceed cautiously. It may be prudent to postpone the benefits of AI in order to avoid AI disasters. This is not the one-sided choice Andreessen makes it out to be. We need to navigate a careful tradeoff.

